Inter-observer agreement and reliability assessment for observational studies of clinical work - ScienceDirect

Risk Factors for Multidrug-Resistant Tuberculosis among Patients with Pulmonary Tuberculosis at the Central Chest Institute of Thailand | PLOS ONE

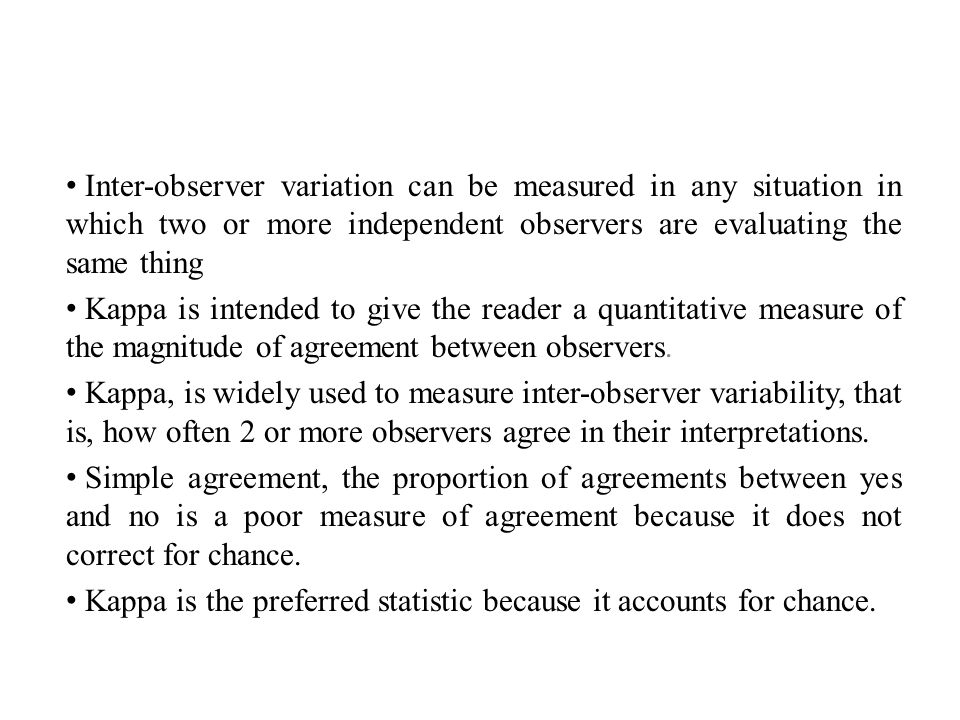

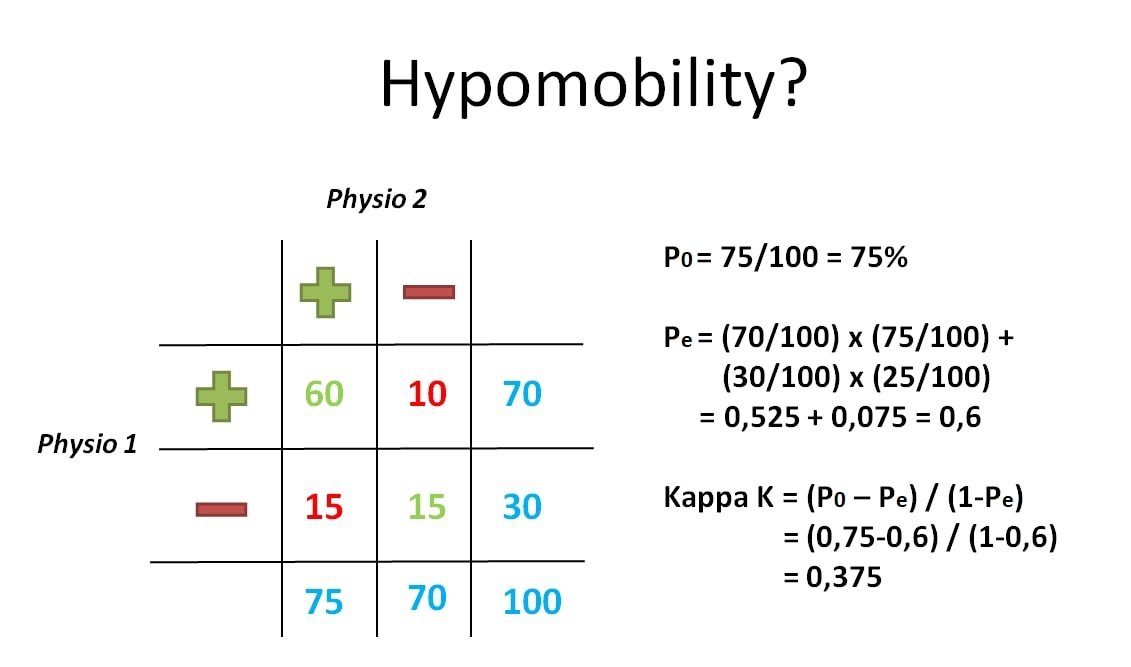

Inter-observer variation can be measured in any situation in which two or more independent observers are evaluating the same thing. Kappa is intended to. - ppt download

(PDF) The Kappa Statistic in Reliability Studies: Use, Interpretation, and Sample Size Requirements Perspective | mitz ser - Academia.edu

![[PDF] The kappa statistic in reliability studies: use, interpretation, and sample size requirements. | Semantic Scholar](https://d3i71xaburhd42.cloudfront.net/6d3768fde2a9dbf78644f0a817d4470c836e60b7/3-Table1-1.png)

[PDF] The kappa statistic in reliability studies: use, interpretation, and sample size requirements. | Semantic Scholar

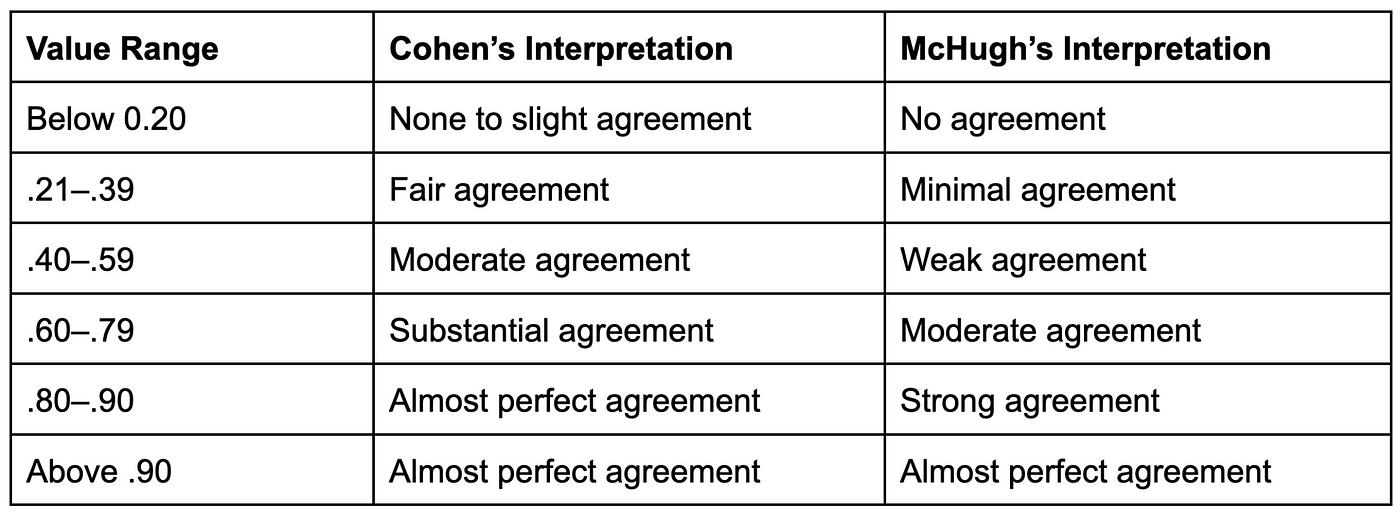

![[PDF] Understanding interobserver agreement: the kappa statistic. | Semantic Scholar](https://d3i71xaburhd42.cloudfront.net/e45dcfc0a65096bdc5b19d00e4243df089b19579/3-Table2-1.png)

![[PDF] Understanding interobserver agreement: the kappa statistic. | Semantic Scholar](https://d3i71xaburhd42.cloudfront.net/e45dcfc0a65096bdc5b19d00e4243df089b19579/3-Table3-1.png)